! [ -e /content ] && pip install -Uqq xcube # upgrade xcube on colabTraining an XML Text Classifier

from fastai.text.all import *

from xcube.text.all import *

from fastai.metrics import accuracy # there's an 'accuracy' metric in xcube as well (Deb fix name conflict later)Make sure we have that “beast”:

ic(torch.cuda.get_device_name(default_device()));

test_eq(torch.cuda.get_device_name(0), torch.cuda.get_device_name(default_device()))

test_eq(default_device(), torch.device(0))

print(f"GPU memory = {torch.cuda.get_device_properties(default_device()).total_memory/1024**3}GB")ic| torch.cuda.get_device_name(default_device()): 'Quadro RTX 8000'GPU memory = 44.99969482421875GBsource = untar_xxx(XURLs.MIMIC3)

source_l2r = untar_xxx(XURLs.MIMIC3_L2R)Setting some environment variables:

os.environ['CUDA_LAUNCH_BLOCKING'] = "1"Setting defaults for pandas and matplotlib:

# Set the default figure size

plt.rcParams["figure.figsize"] = (8, 4)Altering some default jupyter settings:

from IPython.core.interactiveshell import InteractiveShell

# InteractiveShell.ast_node_interactivity = "last" # "all"DataLoaders for the Language Model

To be able to use Transfer Learning, first we need to fine-tune our Language Model (which we pretrained on Wikipedia) on the corpus of Wiki-500k (the one we downloaded). Here we will build the DataLoaders object using fastai’s DataBlock API:

data = source/'mimic3-9k.csv'

!head -n 1 {data}subject_id,hadm_id,text,labels,length,is_validdf = pd.read_csv(data,

header=0,

names=['subject_id', 'hadm_id', 'text', 'labels', 'length', 'is_valid'],

dtype={'subject_id': str, 'hadm_id': str, 'text': str, 'labels': str, 'length': np.int64, 'is_valid': bool})

len(df)52726df[['text', 'labels']] = df[['text', 'labels']].astype(str)Let’s take a look at the data:

df.head(3)| subject_id | hadm_id | text | labels | length | is_valid | |

|---|---|---|---|---|---|---|

| 0 | 86006 | 111912 | admission date discharge date date of birth sex f service surgery allergies patient recorded as having no known allergies to drugs attending first name3 lf chief complaint 60f on coumadin was found slightly drowsy tonight then fell down stairs paramedic found her unconscious and she was intubated w o any medication head ct shows multiple iph transferred to hospital1 for further eval major surgical or invasive procedure none past medical history her medical history is significant for hypertension osteoarthritis involving bilateral knee joints with a dependence on cane for ambulation chronic... | 801.35;348.4;805.06;807.01;998.30;707.24;E880.9;427.31;414.01;401.9;V58.61;V43.64;707.00;E878.1;96.71 | 230 | False |

| 1 | 85950 | 189769 | admission date discharge date service neurosurgery allergies sulfa sulfonamides attending first name3 lf chief complaint cc cc contact info major surgical or invasive procedure none history of present illness hpi 88m who lives with family had fall yesterday today had decline in mental status ems called pt was unresponsive on arrival went to osh head ct showed large r sdh pt was intubated at osh and transferred to hospital1 for further care past medical history cad s p mi in s p cabg in ventricular aneurysm at that time cath in with occluded rca unable to intervene chf reported ef 1st degre... | 852.25;E888.9;403.90;585.9;250.00;414.00;V45.81;96.71 | 304 | False |

| 2 | 88025 | 180431 | admission date discharge date date of birth sex f service surgery allergies no known allergies adverse drug reactions attending first name3 lf chief complaint s p fall major surgical or invasive procedure none history of present illness 45f etoh s p fall from window at feet found ambulating and slurring speech on scene intubated en route for declining mental status in the er the patient was found to be bradycardic to the s with bp of systolic she was given atropine dilantin and was started on saline past medical history unknown social history unknown family history unknown physical exam ex... | 518.81;348.4;348.82;801.25;427.89;E882;V49.86;305.00;96.71;38.93 | 359 | False |

We will now create the DataLoaders using DataBlock API:

dls_lm = DataBlock(

blocks = TextBlock.from_df('text', is_lm=True),

get_x = ColReader('text'),

splitter = RandomSplitter(0.1)

).dataloaders(df, bs=384, seq_len=80)For the backward LM:

dls_lm_r = DataBlock(

blocks = TextBlock.from_df('text', is_lm=True, backwards=True),

get_x = ColReader('text'),

splitter = RandomSplitter(0.1)

).dataloaders(df, bs=384, seq_len=80)Let’s take a look at the batches:

dls_lm.show_batch(max_n=2)| text | text_ | |

|---|---|---|

| 0 | xxbos admission date discharge date date of birth sex m history of present illness first name8 namepattern2 known lastname is the firstborn of triplets at week gestation born to a year old gravida para woman immune rpr non reactive hepatitis b surface antigen negative group beta strep status unknown this was an intrauterine insemination achieved pregnancy the pregnancy was uncomplicated until when mother was admitted for evaluation of pregnancy induced hypertension she was treated with betamethasone and discharged home she | admission date discharge date date of birth sex m history of present illness first name8 namepattern2 known lastname is the firstborn of triplets at week gestation born to a year old gravida para woman immune rpr non reactive hepatitis b surface antigen negative group beta strep status unknown this was an intrauterine insemination achieved pregnancy the pregnancy was uncomplicated until when mother was admitted for evaluation of pregnancy induced hypertension she was treated with betamethasone and discharged home she was |

| 1 | occluded rca with l to r collaterals the femoral sheath was sewn in started on heparin and transferred to hospital1 for surgical revascularization past medical history coronary artery diease s p myocardial infarction s p pci to rca subarachnoid hemorrhage secondary to streptokinase hypertension hyperlipidemia hiatal hernia gastritis depression reactive airway disease s p ppm placement for 2nd degree av block social history history of smoking having quit in with a pack year history family history strong family history of | rca with l to r collaterals the femoral sheath was sewn in started on heparin and transferred to hospital1 for surgical revascularization past medical history coronary artery diease s p myocardial infarction s p pci to rca subarachnoid hemorrhage secondary to streptokinase hypertension hyperlipidemia hiatal hernia gastritis depression reactive airway disease s p ppm placement for 2nd degree av block social history history of smoking having quit in with a pack year history family history strong family history of premature |

dls_lm_r.show_batch(max_n=2)| text | text_ | |

|---|---|---|

| 0 | sb west d i basement name first doctor bldg name ward hospital lm lf name3 first lf name last name last doctor d i 30a visit fellow sb west d i name first doctor name last doctor d i 30p visit attending opat appointments up follow disease infectious garage name ward hospital parking best east campus un location ctr clinical name ward hospital sc building fax telephone md namepattern4 name last pattern1 name name11 first with am at wednesday when | west d i basement name first doctor bldg name ward hospital lm lf name3 first lf name last name last doctor d i 30a visit fellow sb west d i name first doctor name last doctor d i 30p visit attending opat appointments up follow disease infectious garage name ward hospital parking best east campus un location ctr clinical name ward hospital sc building fax telephone md namepattern4 name last pattern1 name name11 first with am at wednesday when services |

| 1 | bare and catheterization cardiac a for hospital1 to flown were you infarction myocardial or attack heart a and pain chest had you instructions discharge independent ambulatory status activity interactive and alert consciousness of level coherent and clear status mental condition discharge hyperglycemia hyperlipidemia hypertension infarction myocardial inferior acute diagnosis discharge home disposition discharge refills tablets disp pain chest for needed as year month of total a for minutes every sublingual tablet one sig sublingual tablet mg nitroglycerin day a once | and catheterization cardiac a for hospital1 to flown were you infarction myocardial or attack heart a and pain chest had you instructions discharge independent ambulatory status activity interactive and alert consciousness of level coherent and clear status mental condition discharge hyperglycemia hyperlipidemia hypertension infarction myocardial inferior acute diagnosis discharge home disposition discharge refills tablets disp pain chest for needed as year month of total a for minutes every sublingual tablet one sig sublingual tablet mg nitroglycerin day a once po |

The length of our vocabulary is:

len(dls_lm.vocab)57376Let’s take a look at some words of the vocab:

print(coll_repr(L(dls_lm.vocab), 30))(#57376) ['xxunk','xxpad','xxbos','xxeos','xxfld','xxrep','xxwrep','xxup','xxmaj','the','and','to','of','was','with','a','on','in','for','mg','no','tablet','patient','is','he','at','blood','name','po','she'...]Creating the DataLaoders takes some time, so smash that save button (also a good idea to save the dls_lm.vocab for later use) if you are working on your own dataset. In this case though untar_xxx has got it for you:

print("\n".join(L(source.glob("**/*dls*lm*.pkl")).map(str))) # the ones with _r are for the reverse language model/home/deb/.xcube/data/mimic3/mimic3-9k_dls_lm.pkl

/home/deb/.xcube/data/mimic3/mimic3-9k_dls_lm_vocab_r.pkl

/home/deb/.xcube/data/mimic3/mimic3-9k_dls_lm_vocab.pkl

/home/deb/.xcube/data/mimic3/mimic3-9k_dls_lm_r.pkl

/home/deb/.xcube/data/mimic3/mimic3-9k_dls_lm_old.pklTo load back the dls_lm later on:

dls_lm = torch.load(source/'mimic3-9k_dls_lm.pkl')dls_lm_r = torch.load(source/'mimic3-9k_dls_lm_r.pkl')Learner for the Language Model Fine-Tuning:

learn = language_model_learner(

dls_lm, AWD_LSTM, drop_mult=0.3,

metrics=[accuracy, Perplexity()]).to_fp16()And, one more for the reverse:

learn_r = language_model_learner(

dls_lm_r, AWD_LSTM, drop_mult=0.3, backwards=True,

metrics=[accuracy, Perplexity()]).to_fp16()Training a language model on the full datset takes a lot of time. So you can train one on a tiny dataset for illustration. Or you can skip the training and just load up the one that’s pretrained and downloaded by untar_xxx and just do the validation.

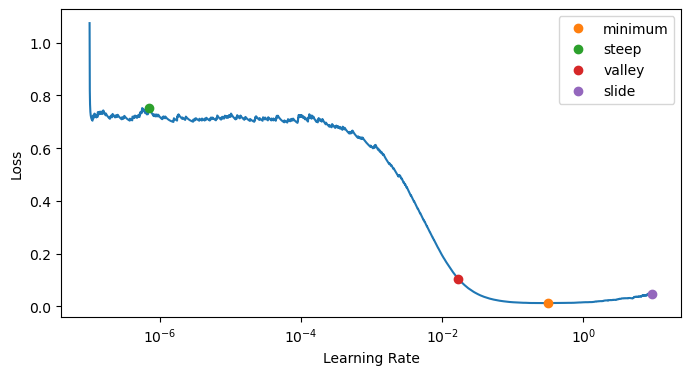

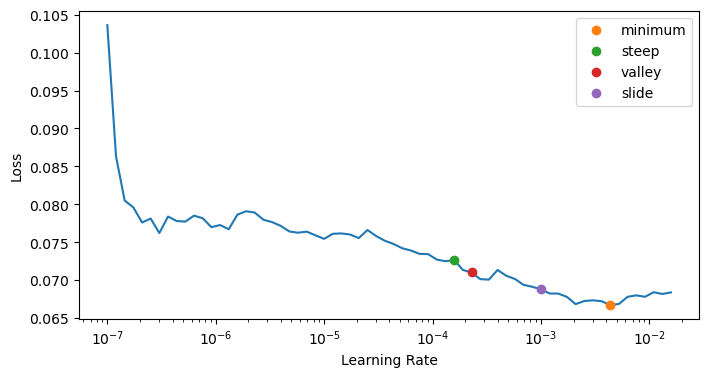

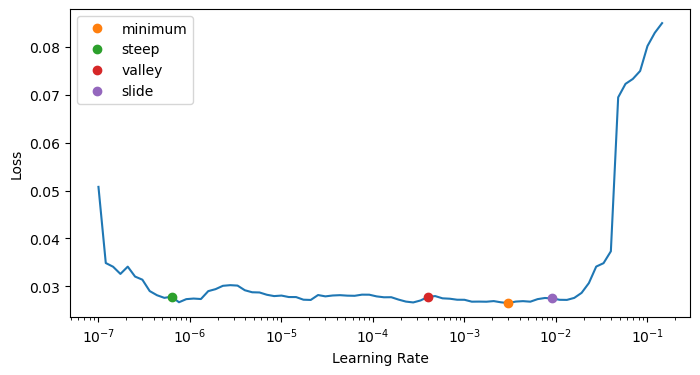

Let’s compute the learning rate using the lr_find:

lr_min, lr_steep, lr_valley, lr_slide = learn.lr_find(suggest_funcs=(minimum, steep, valley, slide))

lr_min, lr_steep, lr_valley, lr_slidelearn.fit_one_cycle(1, lr_min)| epoch | train_loss | valid_loss | accuracy | perplexity | time |

|---|---|---|---|---|---|

| 0 | 3.646323 | 3.512013 | 0.382642 | 33.515659 | 2:27:57 |

It takes quite a while to train each epoch, so we’ll be saving the intermediate model results during the training process:

Since we have completed the initial training, we will now continue fine-tuning the model after unfreezing:

learn.unfreeze()and run lr_find again, because we now have more layers to train, and the last layers weight have already been trained for one epoch:

lr_min, lr_steep, lr_valley, lr_slide = learn.lr_find(suggest_funcs=(minimum, steep, valley, slide))

lr_min, lr_steep, lr_valley, lr_slideLet’s now traing with a suitable learning rate:

learn.fit_one_cycle(10, lr_max=2e-3, cbs=SaveModelCallback(fname='lm'))Note: Make sure if you have trained the most recent language model Learner for more epochs (then you need to save that version)

Here you can load the pretrained language model which untar_xxx downloaded:

learn = learn.load(source/'mimic3-9k_lm')learn_r = learn_r.load(source/'mimic3-9k_lm_r')Let’s validate the Learner to make sure we loaded the correct version:

learn.validate()(#3) [2.060459852218628,0.5676611661911011,7.849578857421875]and the reverse…

learn_r.validate()(#3) [2.101907968521118,0.5691556334495544,8.18176555633545]Saving the encoder of the Language Model

Crucial: Once we have trained our LM we will save all of our model except the final layer that converts activation to probabilities of picking each token in our vocabulary. The model not including the final layer has a sexy name - encoder. We will save it using save_encoder method of the Learner:

# learn.save_encoder('lm_finetuned')

# learn_r.save_encoder('lm_finetuned_r')Saving the decoder of the Language Model

learn.save_decoder('mimic3-9k_lm_decoder')

learn_r.save_decoder('mimic3-9k_lm_decoder_r')This completes the second stage of the text classification process - fine-tuning the Language Model pretrained on Wikipedia corpus. We will now use it to fine-tune a text multi-label text classifier.

DataLoaders for the Multi-Label Classifier (using fastai’s Mid-Level Data API)

Fastai’s midlevel data api is the swiss army knife of data preprocessing. Detailed tutorials can be found here intermediate, advanced. We will use it here to create our dataloaders for the classifier.

Loading Raw Data

data = source/'mimic3-9k.csv'

!head -n 1 {data}subject_id,hadm_id,text,labels,length,is_valid# !shuf -n 200000 {data} > {data_sample}df = pd.read_csv(data,

header=0,

names=['subject_id', 'hadm_id', 'text', 'labels', 'length', 'is_valid'],

dtype={'subject_id': str, 'hadm_id': str, 'text': str, 'labels': str, 'length': np.int64, 'is_valid': bool})df[['text', 'labels']] = df[['text', 'labels']].astype(str)df.head(3)| subject_id | hadm_id | text | labels | length | is_valid | |

|---|---|---|---|---|---|---|

| 0 | 86006 | 111912 | admission date discharge date date of birth sex f service surgery allergies patient recorded as having no known allergies to drugs attending first name3 lf chief complaint 60f on coumadin was found slightly drowsy tonight then fell down stairs paramedic found her unconscious and she was intubated w o any medication head ct shows multiple iph transferred to hospital1 for further eval major surgical or invasive procedure none past medical history her medical history is significant for hypertension osteoarthritis involving bilateral knee joints with a dependence on cane for ambulation chronic... | 801.35;348.4;805.06;807.01;998.30;707.24;E880.9;427.31;414.01;401.9;V58.61;V43.64;707.00;E878.1;96.71 | 230 | False |

| 1 | 85950 | 189769 | admission date discharge date service neurosurgery allergies sulfa sulfonamides attending first name3 lf chief complaint cc cc contact info major surgical or invasive procedure none history of present illness hpi 88m who lives with family had fall yesterday today had decline in mental status ems called pt was unresponsive on arrival went to osh head ct showed large r sdh pt was intubated at osh and transferred to hospital1 for further care past medical history cad s p mi in s p cabg in ventricular aneurysm at that time cath in with occluded rca unable to intervene chf reported ef 1st degre... | 852.25;E888.9;403.90;585.9;250.00;414.00;V45.81;96.71 | 304 | False |

| 2 | 88025 | 180431 | admission date discharge date date of birth sex f service surgery allergies no known allergies adverse drug reactions attending first name3 lf chief complaint s p fall major surgical or invasive procedure none history of present illness 45f etoh s p fall from window at feet found ambulating and slurring speech on scene intubated en route for declining mental status in the er the patient was found to be bradycardic to the s with bp of systolic she was given atropine dilantin and was started on saline past medical history unknown social history unknown family history unknown physical exam ex... | 518.81;348.4;348.82;801.25;427.89;E882;V49.86;305.00;96.71;38.93 | 359 | False |

Sample a small fraction of the dataset to ensure quick iteration (Skip this if you want to do this on the full dataset)

# df = df.sample(frac=0.3, random_state=89, ignore_index=True)

# df = df.sample(frac=0.025, random_state=89, ignore_index=True)

df = df.sample(frac=0.005, random_state=89, ignore_index=True)

len(df)264Let’s now gather the labels from the ‘labels’ columns of the df:

lbl_freqs = Counter()

for labels in df.labels: lbl_freqs.update(labels.split(';'))The total number of labels are:

len(lbl_freqs)8922Let’s take a look at the most common labels:

pd.DataFrame(lbl_freqs.most_common(20), columns=['label', 'frequency'])| label | frequency | |

|---|---|---|

| 0 | 401.9 | 20053 |

| 1 | 38.93 | 14444 |

| 2 | 428.0 | 12842 |

| 3 | 427.31 | 12594 |

| 4 | 414.01 | 12179 |

| 5 | 96.04 | 9932 |

| 6 | 96.6 | 9161 |

| 7 | 584.9 | 8907 |

| 8 | 250.00 | 8784 |

| 9 | 96.71 | 8619 |

| 10 | 272.4 | 8504 |

| 11 | 518.81 | 7249 |

| 12 | 99.04 | 7147 |

| 13 | 39.61 | 6809 |

| 14 | 599.0 | 6442 |

| 15 | 530.81 | 6156 |

| 16 | 96.72 | 5926 |

| 17 | 272.0 | 5766 |

| 18 | 285.9 | 5296 |

| 19 | 88.56 | 5240 |

Let’s make a list of all labels (We will use it later while creating the DataLoader)

lbls = list(lbl_freqs.keys())Dataset Statistics (Optional)

Let’s try to understand what captures the hardness of an xml dataset

Check #1: Number of instances (train/valid split)

train, valid = df.index[~df['is_valid']], df.index[df['is_valid']]

len(train), len(valid)(244, 20)Check #2: Avg number of instances per label

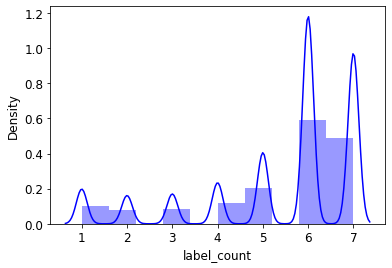

array(list(lbl_freqs.values())).mean()3.341463414634146Check #3: Plotting the label distribution

lbl_count = []

for lbls in df.labels: lbl_count.append(len(lbls.split(',')))df_copy = df.copy()

df_copy['label_count'] = lbl_countdf_copy.head(2)| text | labels | is_valid | label_count | |

|---|---|---|---|---|

| 0 | Methodical Bible study: A new approach to hermeneutics /SEP/ Methodical Bible study: A new approach to hermeneutics. Inductive study compares related Bible texts in order to let the Bible interpret itself, rather than approaching Scripture with predetermined notions of what it will say. Dr. Trainas Methodical Bible Study was not intended to be the last word in inductive Bible study; but since its first publication in 1952, it has become a foundational text in this field. Christian colleges and seminaries have made it required reading for beginning Bible students, while many churches have... | 34141,119299,126600,128716,187372,218742 | False | 6 |

| 1 | Southeastern Mills Roast Beef Gravy Mix, 4.5-Ounce Packages (Pack of 24) /SEP/ Southeastern Mills Roast Beef Gravy Mix, 4.5-Ounce Packages (Pack of 24). Makes 3-1/2 cups. Down home taste. Makes hearty beef stew base. | 465536,465553,615429 | False | 3 |

The average number of labels per instance is:

df_copy.label_count.mean()5.385563363865518import seaborn as sns

sns.distplot(df_copy.label_count, bins=10, color='b');/home/deb/miniconda3/lib/python3.9/site-packages/seaborn/distributions.py:2619: FutureWarning: `distplot` is a deprecated function and will be removed in a future version. Please adapt your code to use either `displot` (a figure-level function with similar flexibility) or `histplot` (an axes-level function for histograms).

warnings.warn(msg, FutureWarning)

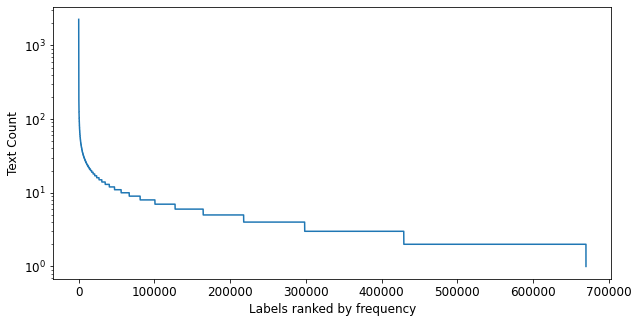

lbls_sorted = sorted(lbl_freqs.items(), key=lambda item: item[1], reverse=True)lbls_sorted[:20][('455619', 2258),

('455662', 2176),

('547041', 2160),

('516790', 1214),

('455712', 1203),

('455620', 1133),

('632786', 1132),

('632789', 1132),

('632785', 1030),

('632788', 1030),

('492255', 938),

('455014', 872),

('670034', 850),

('427871', 815),

('599701', 803),

('308331', 801),

('581325', 801),

('649272', 799),

('455704', 762),

('666760', 733)]ranked_lbls = L(lbls_sorted).itemgot(0)

ranked_freqs = L(lbls_sorted).itemgot(1)

ranked_lbls, ranked_freqs((#670091) ['455619','455662','547041','516790','455712','455620','632786','632789','632785','632788'...],

(#670091) [2258,2176,2160,1214,1203,1133,1132,1132,1030,1030...])fig = plt.figure(figsize=(10,5))

ax = fig.add_subplot(1,1,1)

ax.plot(ranked_freqs)

ax.set_xlabel('Labels ranked by frequency')

ax.set_ylabel('Text Count')

ax.set_yscale('log');

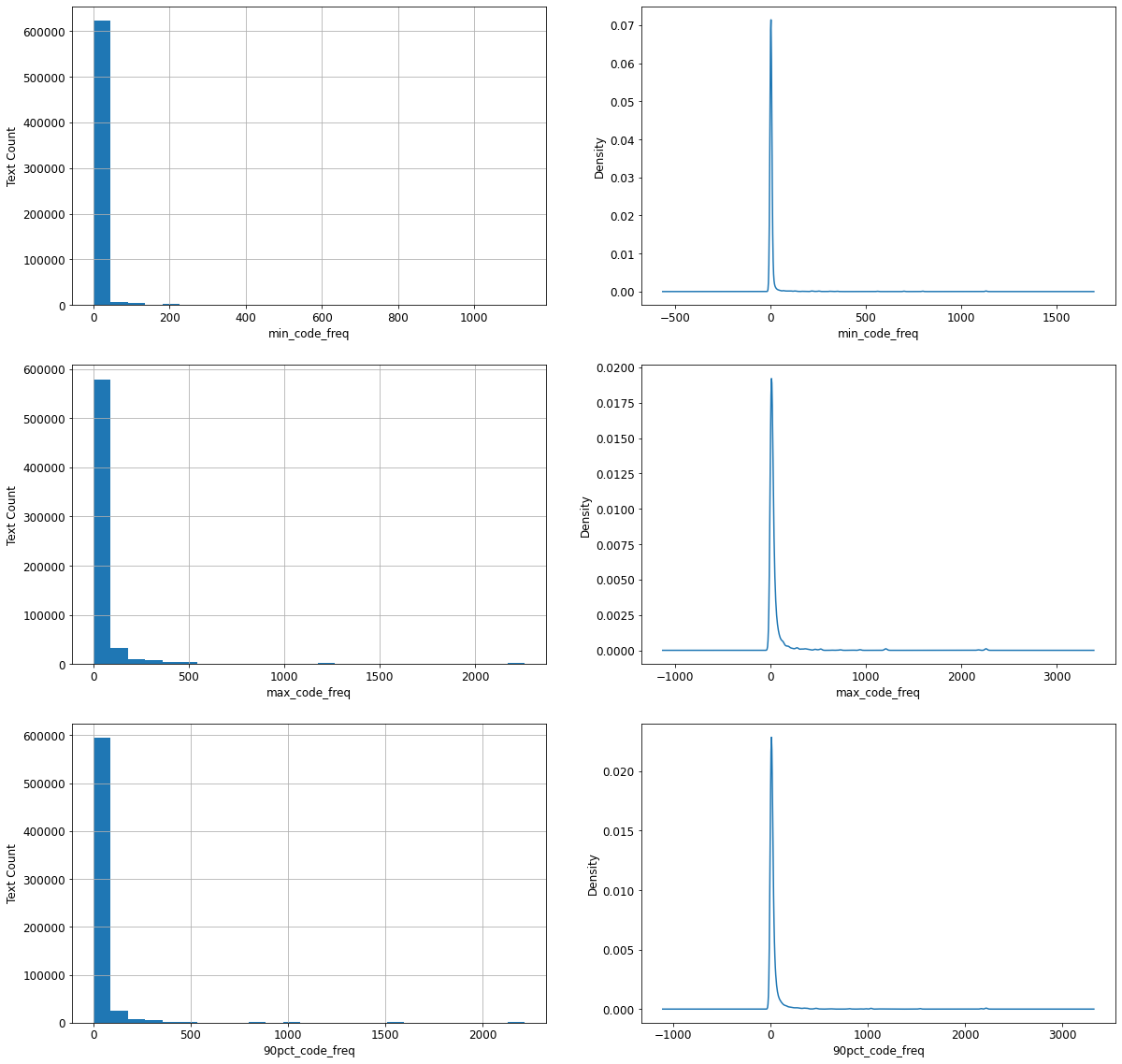

Check #4: Computing the min label freq for each text

df_copy.head(10)| text | labels | is_valid | label_count | min_code_freq | max_code_freq | 90pct_code_freq | |

|---|---|---|---|---|---|---|---|

| 0 | Methodical Bible study: A new approach to hermeneutics /SEP/ Methodical Bible study: A new approach to hermeneutics. Inductive study compares related Bible texts in order to let the Bible interpret itself, rather than approaching Scripture with predetermined notions of what it will say. Dr. Trainas Methodical Bible Study was not intended to be the last word in inductive Bible study; but since its first publication in 1952, it has become a foundational text in this field. Christian colleges and seminaries have made it required reading for beginning Bible students, while many churches have... | 34141,119299,126600,128716,187372,218742 | False | 6 | 2 | 29 | 25.5 |

| 1 | Southeastern Mills Roast Beef Gravy Mix, 4.5-Ounce Packages (Pack of 24) /SEP/ Southeastern Mills Roast Beef Gravy Mix, 4.5-Ounce Packages (Pack of 24). Makes 3-1/2 cups. Down home taste. Makes hearty beef stew base. | 465536,465553,615429 | False | 3 | 2 | 3 | 3.0 |

| 2 | MMF Industries 24-Key Portable Zippered Key Case (201502417) /SEP/ MMF Industries 24-Key Portable Zippered Key Case (201502417). The MMF Industries 201502417 24-Key Portable Zippered Key Case is an attractive burgundy-colored leather-like vinyl case with brass corners and looks like a portfolio. Its easy-slide zipper keeps keys enclosed, and a hook-and-loop fastener strips keep keys securely in place. Key tags are included.\tZippered key case offers a portable alternative to metal wall key cabinets. Included key tags are backed by hook-and-loop closures. Easy slide zipper keeps keys enclos... | 393828 | False | 1 | 2 | 2 | 2.0 |

| 3 | Hoover the Fishing President /SEP/ Hoover the Fishing President. Hal Elliott Wert has spent years researching Herbert Hoover, Franklin Roosevelt, and Harry Truman. He holds a Ph.D. from the University of Kansas and currently teaches at the Kansas City Art Institute. | 167614,223686 | False | 2 | 4 | 4 | 4.0 |

| 4 | GeoPuzzle U.S.A. and Canada - Educational Geography Jigsaw Puzzle (69 pcs) /SEP/ GeoPuzzle U.S.A. and Canada - Educational Geography Jigsaw Puzzle (69 pcs). GeoPuzzles are jigsaw puzzles that make learning world geography fun. The pieces of a GeoPuzzle are shaped like individual countries, so children learn as they put the puzzle together. Award-winning Geopuzzles help to build fine motor, cognitive, language, and problem-solving skills, and are a great introduction to world geography for children 4 and up. Designed by an art professor, jumbo sized and brightly colored GeoPuzzles are avail... | 480528,480530,480532,485144,485146,598793 | False | 6 | 5 | 10 | 8.5 |

| 5 | Amazon.com: Paul Fredrick Men's Cotton Pinpoint Oxford Straight Collar Dress Shirt: Clothing /SEP/ Amazon.com: Paul Fredrick Men's Cotton Pinpoint Oxford Straight Collar Dress Shirt: Clothing. Pinpoint Oxford Cotton. Traditional Straight Collar, 1/4 Topstitched. Button Cuffs, 1/4 Topstitched. Embedded Collar Stay. Regular, Big and Tall. Top Center Placket. Split Yoke. Single Front Rounded Pocket. Machine Wash Or Dry Clean. Imported. * Big and Tall Sizes - addl $5.00 | 516790,567615,670034 | False | 3 | 256 | 1214 | 1141.2 |

| 6 | Darkest Fear : A Myron Bolitar Novel /SEP/ Darkest Fear : A Myron Bolitar Novel. Myron Bolitar's father's recent heart attack brings Myron smack into a midlife encounter with issues of adulthood and mortality. And if that's not enough to turn his life upside down, the reappearance of his first serious girlfriend is. The basketball star turned sports agent, who does a little detecting when business is slow, is saddened by the news that Emily Downing's 13-year-old son is dying and desperately needs a bone marrow transplant; even if she did leave him for the man who destroyed his basketball c... | 50442,50516,50647,50672,50680,662538 | False | 6 | 2 | 3 | 2.5 |

| 7 | In Debt We Trust (2007) /SEP/ In Debt We Trust (2007). Just a few decades ago, owing more money than you had in your bank account was the exception, not the rule. Yet, in the last 10 years, consumer debt has doubled and, for the first time, Americans are spending more than they're saving -- or making. This April, award-winning former ABC News and CNN producer Danny Schechter investigates America's mounting debt crisis in his latest hard-hitting expose, IN DEBT WE TRUST. While many Americans are "maxing out" on credit cards, there is a much deeper story: power is shifting into few... | 493095,499382,560691,589867,591343,611615,619231 | False | 7 | 4 | 50 | 47.0 |

| 8 | Craftsman 9-34740 32 Piece 1/4-3/8 Drive Standard/Metric Socket Wrench Set /SEP/ Craftsman 9-34740 32 Piece 1/4-3/8 Drive Standard/Metric Socket Wrench Set. Craftsman 9-34740 32 Pc. 1/4-3/8 Drive Standard/Metric Socket Wrench Set. The Craftsman 9-34740 32 Pc. 1/4-3/8 Drive Standard/Metric Socket Wrench Set includes 1/4 Inch Drive Sockets; 8 Six-Point Standard Sockets:9-43492 7/32-Inch, 9-43496 11/32,9-43493 1/4-Inch, 9-43497 3/8-Inch, 9-43494 9/32-Inch, 9-43498 7/16-Inch,9-43495 5/16-Inch, 9-43499 1/2-Inch; 7 Six-Point Metric Sockets:9-43505 4mm, 9-43504 8mm, 9-43501 5mm, 9-43507 9mm,9-435... | 590298,609038 | False | 2 | 2 | 2 | 2.0 |

| 9 | 2013 Hammer Nutrition Complex Carbohydrate Energy Gel - 12-Pack /SEP/ 2013 Hammer Nutrition Complex Carbohydrate Energy Gel - 12-Pack. Hammer Nutrition made the Complex Carbohydrate Energy Gel with natural ingredients and real fruit, so you won't have those unhealthy insulin-spike sugar highs. Instead, you get prolonged energy levels from the complex carbohydrates and amino acids in this Hammer Gel. The syrup-like consistency makes it easy to drink straight or add to your water. Unlike nutrition bars that freeze on your winter treks or melt during hot adventure races, the Complex Carbohydr... | 453268,453277,510450,516828,520780,542684,634756 | False | 7 | 3 | 11 | 9.2 |

df_copy['min_code_freq'] = df_copy.apply(

lambda row: min([lbl_freqs[lbl] for lbl in row.labels.split(',')]), axis=1)df_copy['max_code_freq'] = df_copy.apply(

lambda row: max([lbl_freqs[lbl] for lbl in row.labels.split(',')]), axis=1)df_copy['90pct_code_freq'] = df_copy.apply(

lambda row: np.percentile([lbl_freqs[lbl] for lbl in row.labels.split(',')], 90), axis=1)fig, axes = plt.subplots(nrows=3, ncols=2, figsize=(20,20))

for freq, axis in zip(['min_code_freq', 'max_code_freq', '90pct_code_freq'], axes):

df_copy[freq].hist(ax=axis[0], bins=25)

axis[0].set_xlabel(freq)

axis[0].set_ylabel('Text Count')

df_copy[freq].plot.density(ax=axis[1])

axis[1].set_xlabel(freq)

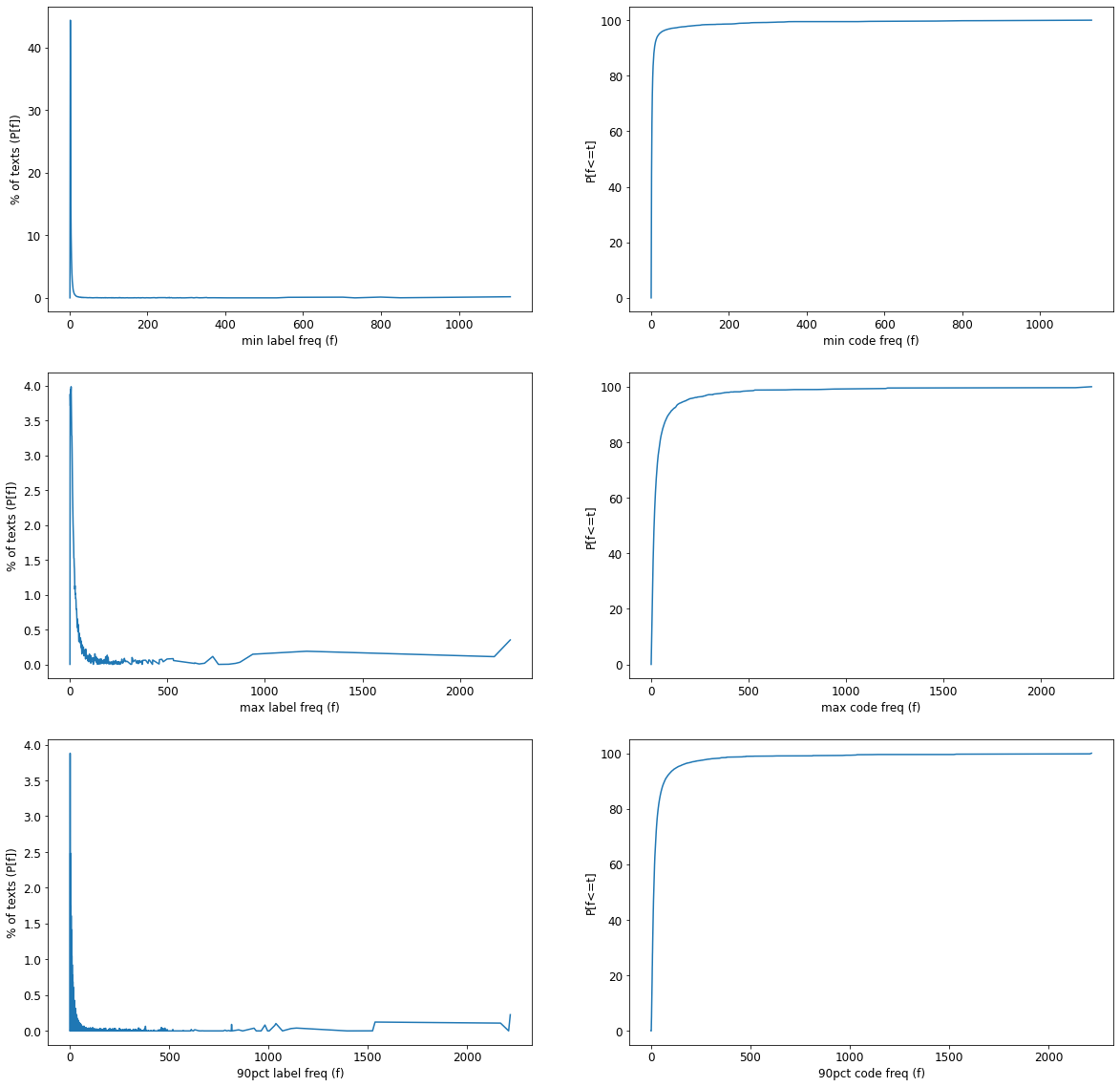

min_code_freqs = Counter(df_copy['min_code_freq'])

max_code_freqs = Counter(df_copy['max_code_freq'])

nintypct_code_freqs = Counter(df_copy['90pct_code_freq'])total_notes = L(min_code_freqs.values()).sum()

total_notes643474for kmin in min_code_freqs:

min_code_freqs[kmin] = (min_code_freqs[kmin]/total_notes) * 100

for kmax in max_code_freqs:

max_code_freqs[kmax] = (max_code_freqs[kmax]/total_notes) * 100

for k90pct in nintypct_code_freqs:

nintypct_code_freqs[k90pct] = (nintypct_code_freqs[k90pct]/total_notes) * 100min_code_freqs = dict(sorted(min_code_freqs.items(), key=lambda item: item[0]))

max_code_freqs = dict(sorted(max_code_freqs.items(), key=lambda item: item[0]))

nintypct_code_freqs = dict(sorted(nintypct_code_freqs.items(), key=lambda item: item[0]))fig, axes = plt.subplots(nrows=3, ncols=2, figsize=(20,20))

for axis, freq_dict, label in zip(axes, (min_code_freqs, max_code_freqs, nintypct_code_freqs), ('min', 'max', '90pct')):

axis[0].plot(freq_dict.keys(), freq_dict.values())

axis[0].set_xlabel(f"{label} label freq (f)")

axis[0].set_ylabel("% of texts (P[f])");

axis[1].plot(freq_dict.keys(), np.cumsum(list(freq_dict.values())))

axis[1].set_xlabel(f"{label} code freq (f)")

axis[1].set_ylabel("P[f<=t]");

Steps for creating the classifier DataLoaders using fastai’s Transforms:

1. train/valid splitter:

Okay, based on the is_valid column of our Dataframe, let’s create a splitter:

def splitter(df):

train = df.index[~df['is_valid']].tolist()

valid = df.index[df['is_valid']].to_list()

return train, validLet’s check the train/valid split

splits = [train, valid] = splitter(df)

L(splits[0]), L(splits[1])((#49354) [0,1,2,3,4,5,6,7,8,9...],

(#3372) [1631,1632,1633,1634,1635,1636,1637,1638,1639,1640...])2. Making the Datasets object:

Crucial: We need the vocab of the language model so that we can make sure we use the same correspondence of token to index. Otherwise, the embeddings we learned in our fine-tuned language model won’t make any sense to our classifier model, and the fine-tuning won’t be of any use. So we need to pass the lm_vocab to the Numericalize transform:

So let’s load the vocab of the language model:

lm_vocab = torch.load(source/'mimic3-9k_dls_lm_vocab.pkl')

lm_vocab_r = torch.load(source/'mimic3-9k_dls_lm_vocab_r.pkl')all_equal(lm_vocab, lm_vocab_r)

L(lm_vocab)(#57376) ['xxunk','xxpad','xxbos','xxeos','xxfld','xxrep','xxwrep','xxup','xxmaj','the'...]x_tfms = [Tokenizer.from_df('text'), attrgetter("text"), Numericalize(vocab=lm_vocab)]

x_tfms_r = [Tokenizer.from_df('text', ), attrgetter("text"), Numericalize(vocab=lm_vocab), reverse_text]

y_tfms = [ColReader('labels', label_delim=';'), MultiCategorize(vocab=lbls), OneHotEncode()]

tfms = [x_tfms, y_tfms]

tfms_r = [x_tfms_r, y_tfms]dsets = Datasets(df, tfms, splits=splits)CPU times: user 17.9 s, sys: 3.27 s, total: 21.2 s

Wall time: 2min 38sdsets_r = Datasets(df, tfms_r, splits=splits)CPU times: user 18.2 s, sys: 4.67 s, total: 22.9 s

Wall time: 2min 54sLet’s now check if our Datasets got created alright:

len(dsets.train), len(dsets.valid)(49354, 3372)Let’s check a random data point:

idx = random.randint(0, len(dsets))

x = dsets.train[idx]

assert isinstance(x, tuple) # tuple if independent and dependent variable

x_r = dsets_r.train[idx]dsets.decode(x)('xxbos admission date discharge date date of birth sex f service medicine allergies nsaids aspirin influenza virus vaccine attending first name3 lf chief complaint hypotension resp distress major surgical or invasive procedure rij placement and removal last name un gastric tube placement and removal rectal tube placement and removal picc line placement and removal history of present illness ms known lastname is a 50f with cirrhosis of unproven etiology h o perforated duodenal ulcer who has had weakness and back pain with cough and shortness of breath for days she has chronic low back pain but this is in a different location she also reports fever and chills she reports increasing weakness and fatigue over the same amount of time she is followed by vna who saw her at home and secondary to hypotension and hypoxia requested she go to ed in the ed initial vs were afebrile and o2 sat unable to be read ct of torso showed pneumonia but no abdominal pathology she was seen by surgery she was found to be significantly hypoxic and started on nrb a right ij was placed she was started on levophed after a drop in her pressures in the micu she is conversant and able to tell her story past medical history asthma does not use inhalers htn off meds for several years rheumatoid arthritis seronegative chronic severe back pain s p c sections history of secondary syphilis treated polysubstance abuse notably cocaine but no drug or alcohol use for at least past months depression pulmonary hypertension severe on cardiac cath restrictive lung disease seizures in childhood cirrhosis by liver biopsy etiology as yet unknown duodenal ulcer s p surgical repair xxunk location un in summer social history lives with boyfriend who helps in her healthcare has children past h o cocaine abuse but not current denies any etoh drug or tobacco use since cirrhosis diagnosis in month only previously smoked up to pack per wk family history noncontributory physical exam on admission t hr bp r o2 sat on nrb general alert oriented moderate respiratory distress heent sclera anicteric drymm oropharynx clear neck supple jvp not elevated no lad lungs rhonchi and crackles in bases xxrep 3 l with egophany increased xxunk cv tachy rate and rhythm normal s1 s2 no murmurs rubs gallops abdomen soft diffusely tender non distended bowel sounds present healing midline ex lap wound dehiscence on caudal end but without erythema drainage ext warm well perfused pulses no clubbing cyanosis or edema pertinent results admission labs 13pm blood wbc rbc hgb hct mcv mch mchc rdw plt ct 13pm blood neuts bands lymphs monos eos baso 13pm blood pt ptt inr pt 13pm blood plt smr high plt ct 13pm blood glucose urean creat na k cl hco3 angap 13pm blood alt ast ld ldh alkphos amylase totbili 22am blood ck mb ctropnt 42pm blood probnp numeric identifier 13pm blood albumin calcium phos mg 22am blood cortsol 28pm blood type art o2 flow po2 pco2 ph caltco2 base xs intubat not intuba vent spontaneou comment non rebrea 20pm blood lactate discharge labs 58am blood wbc rbc hgb hct mcv mch mchc rdw plt ct 58am blood plt ct 58am blood pt ptt inr pt 58am blood glucose urean creat na k cl hco3 angap 58am blood alt ast ld ldh alkphos totbili 58am blood calcium phos mg imaging ct chest abd pelvis impression no etiology for abdominal pain identified no free air is noted within the abdomen interval increase in diffuse anasarca and bilateral pleural effusions small on the right and small to moderate on the left compressive atelectasis and new bilateral lower lobe pneumonias interval improvement in the degree of mediastinal lymphadenopathy interval development of small pericardial effusion no ct findings to suggest tamponade tte the left atrium is normal in size there is mild symmetric left ventricular hypertrophy with normal cavity size and regional global systolic function lvef the estimated cardiac index is borderline low 5l min m2 tissue doppler imaging suggests a normal left ventricular filling pressure pcwp 12mmhg the right ventricular free wall is hypertrophied the right ventricular cavity is markedly dilated with severe global free wall hypokinesis there is abnormal septal motion position consistent with right ventricular pressure volume overload the aortic valve leaflets appear structurally normal with good leaflet excursion and no aortic regurgitation the mitral valve leaflets are structurally normal mild mitral regurgitation is seen the tricuspid valve leaflets are mildly thickened there is mild moderate tricuspid xxunk there is severe pulmonary artery systolic hypertension there is a small circumferential pericardial effusion without echocardiographic signs of tamponade compared with the prior study images reviewed of the overall findings are similar ct abd pelvis impression anasarca increased bilateral pleural effusions small to moderate right greater than left trace pericardial fluid increased left lower lobe collapse consolidation right basilar atelectasis has also increased new ascites no free air dilated fluid filled colon and rectum no wall thickening to suggest colitis no evidence of small bowel obstruction bilat upper extremities venous ultrasound impression non occlusive right subclavian deep vein thrombosis cta chest impression no pulmonary embolism to the subsegmental level anasarca moderate to large left and moderate right pleural effusion small pericardial effusion unchanged right ventricle and right atrium enlargement since enlarged main pulmonary artery could be due to pulmonary hypertension complete collapse of the left lower lobe pneumonia can not be ruled out right basilar atelectasis kub impression unchanged borderline distended colon no evidence of small bowel obstruction or ileus brief hospital course this is a 50f with severe pulmonary hypertension h o duodenal perf s p surgical repair cirrhosis of unknown etiology and h o polysubstance abuse who presented with septic shock secondary pneumonia with respiratory distress septic shock pneumonia fever hypotension tachycardia hypoxia leukocytosis with xxrep 3 l infiltrate initially pt was treated for both health care associated pneumonia and possible c diff infection given wbc on admission however c diff treatment was stopped after patient tested negative x3 she completed a day course of vancomycin zosyn on for pneumonia she did initially require non rebreather in the icu but for the last week prior to discharge she has had stable o2 sats to mid s on nasal cannula she did also require pressors for the first several days of this hospitalization but was successfully weaned off pressors approximately wk prior to discharge at the time of discharge she is maintaining stable bp s 100s 110s doppler due to repeated ivf boluses for hypotension during this admission the pt developed anasarca and at the time of discharge is being slowly diuresed with lasix iv daily ogilvies pseudo obstruction the pt experience abd pain and distention with radiologic evidence of dilated loops of small bowel and large bowel during this hospitalization there was never any evidence of a transition point of stricture both a rectal and ngt were placed for decompression the pts symptoms and abd exam improved during the days prior to discharge and at the time of discharge she is tolerating a regular po diet and having normal bowel movements chronic back pain the pt has a long h o chronic back pain she was treated with iv morphine here for acute on chronic back pain likely exacerbated by bed rest and ileus at discharge she was transitioned to po morphine cirrhosis the etiology of this is unknown but the pt has biopsy proven cirrhosis from liver biopsy this likely explains her baseline coagulopathy and hypoalbuminemia here she needs to have outpt f u with hepatology she has no known complications of cirrhosis at this time rue dvt the pt has a rue dvt seen on u s associated with rij cvl which was placed in house she was started on a heparin gtt which was transitioned to coumadin prior to discharge at discharge her inr is she should have follow up lab work on friday of note at discharge the pt had several healing boils on her inner upper thighs thought to be trauma from her foley rubbing against the skin no further treatment was thought to be neccessary for these medications on admission clobetasol cream apply to affected area twice a day as needed gabapentin neurontin mg tablet tablet s by mouth three times a day metoprolol tartrate mg tablet tablet s by mouth twice a day omeprazole mg capsule delayed release e c capsule delayed release e c s by mouth once a day oxycodone mg capsule capsule s by mouth q hours physical therapy dx first name9 namepattern2 location un evaluation and management of motor skills tramadol mg tablet tablet s by mouth twice a day as needed for pain trazodone mg tablet tablet s by mouth hs medications otc docusate sodium mg ml liquid teaspoon by mouth twice a day as needed magnesium oxide mg tablet tablet s by mouth twice a day discharge medications ipratropium bromide solution sig one nebulizer inhalation q6h every hours clobetasol ointment sig one appl topical hospital1 times a day lidocaine mg patch adhesive patch medicated sig one adhesive patch medicated topical qday as needed for back pain please wear on skin hrs then have hrs with patch off hemorrhoidal suppository suppository sig one suppository rectal once a day as needed for pain pantoprazole mg tablet delayed release e c sig one tablet delayed release e c po twice a day outpatient lab work please have you inr checked saturday the results should be sent to your doctor at rehab multivitamin tablet sig one tablet po once a day morphine mg ml solution sig mg po every four hours as needed for pain warfarin mg tablet sig one tablet po once daily at pm discharge disposition extended care facility hospital3 hospital discharge diagnosis healthcare associated pneumonia colonic pseudo obstruction severe pulmonary hypertension cirrhosis htn chronic back pain right upper extremity dvt discharge condition good o2 sat high s on 3l nc bp stable 100s 110s doppler patient tolerating regular diet and having bowel movements not ambulatory discharge instructions you were admitted with a pneumonia which required a stay in our icu but did not require intubation or the use of a breathing tube you did need medications for a low blood pressure for the first several days of your hospitalization while you were here you also had problems with your intestines that caused them to stop working and food to get stuck in your intestines we treated you for this and you are now able to eat and move your bowels you got a blood clot in your arm while you were here we started you on iv medication for this initially but have now transitioned you to oral anticoagulants your doctor will need to monitor the levels of this medication at rehab you will likely need to remain on this medication for months please follow up as below you need to see a hepatologist liver doctor within the next month to follow up on your diagnosis of cirrhosis your list of medications is attached please call your doctor or return to the hospital if you have fevers shortness of breath chest pain abdominal pain vomitting inability to tolerate food or liquids by mouth dizziness or any other concerning symptoms followup instructions primary care first name11 name pattern1 last name namepattern4 md phone telephone fax date time dermatology name6 md name8 md md phone telephone fax date time you need to have your blood drawn to monitor your inr or coumadin level next on saturday the doctor at the rehab with change your coumadin dose accordingly please follow up with a hepatologist liver doctor within month if you would like to make an appointment at our liver center at hospital1 the number is telephone fax please follow up with a pulmonologist about your severe pulmonary hypertension within month if you do not have a pulmonologist and would like to follow up at hospital1 the number is telephone fax completed by',

(#18) ['401.9','38.93','493.90','785.52','995.92','070.54','276.1','038.9','486','518.82'...])dsets.show(x)xxbos admission date discharge date date of birth sex f service medicine allergies nsaids aspirin influenza virus vaccine attending first name3 lf chief complaint hypotension resp distress major surgical or invasive procedure rij placement and removal last name un gastric tube placement and removal rectal tube placement and removal picc line placement and removal history of present illness ms known lastname is a 50f with cirrhosis of unproven etiology h o perforated duodenal ulcer who has had weakness and back pain with cough and shortness of breath for days she has chronic low back pain but this is in a different location she also reports fever and chills she reports increasing weakness and fatigue over the same amount of time she is followed by vna who saw her at home and secondary to hypotension and hypoxia requested she go to ed in the ed initial vs were afebrile and o2 sat unable to be read ct of torso showed pneumonia but no abdominal pathology she was seen by surgery she was found to be significantly hypoxic and started on nrb a right ij was placed she was started on levophed after a drop in her pressures in the micu she is conversant and able to tell her story past medical history asthma does not use inhalers htn off meds for several years rheumatoid arthritis seronegative chronic severe back pain s p c sections history of secondary syphilis treated polysubstance abuse notably cocaine but no drug or alcohol use for at least past months depression pulmonary hypertension severe on cardiac cath restrictive lung disease seizures in childhood cirrhosis by liver biopsy etiology as yet unknown duodenal ulcer s p surgical repair xxunk location un in summer social history lives with boyfriend who helps in her healthcare has children past h o cocaine abuse but not current denies any etoh drug or tobacco use since cirrhosis diagnosis in month only previously smoked up to pack per wk family history noncontributory physical exam on admission t hr bp r o2 sat on nrb general alert oriented moderate respiratory distress heent sclera anicteric drymm oropharynx clear neck supple jvp not elevated no lad lungs rhonchi and crackles in bases xxrep 3 l with egophany increased xxunk cv tachy rate and rhythm normal s1 s2 no murmurs rubs gallops abdomen soft diffusely tender non distended bowel sounds present healing midline ex lap wound dehiscence on caudal end but without erythema drainage ext warm well perfused pulses no clubbing cyanosis or edema pertinent results admission labs 13pm blood wbc rbc hgb hct mcv mch mchc rdw plt ct 13pm blood neuts bands lymphs monos eos baso 13pm blood pt ptt inr pt 13pm blood plt smr high plt ct 13pm blood glucose urean creat na k cl hco3 angap 13pm blood alt ast ld ldh alkphos amylase totbili 22am blood ck mb ctropnt 42pm blood probnp numeric identifier 13pm blood albumin calcium phos mg 22am blood cortsol 28pm blood type art o2 flow po2 pco2 ph caltco2 base xs intubat not intuba vent spontaneou comment non rebrea 20pm blood lactate discharge labs 58am blood wbc rbc hgb hct mcv mch mchc rdw plt ct 58am blood plt ct 58am blood pt ptt inr pt 58am blood glucose urean creat na k cl hco3 angap 58am blood alt ast ld ldh alkphos totbili 58am blood calcium phos mg imaging ct chest abd pelvis impression no etiology for abdominal pain identified no free air is noted within the abdomen interval increase in diffuse anasarca and bilateral pleural effusions small on the right and small to moderate on the left compressive atelectasis and new bilateral lower lobe pneumonias interval improvement in the degree of mediastinal lymphadenopathy interval development of small pericardial effusion no ct findings to suggest tamponade tte the left atrium is normal in size there is mild symmetric left ventricular hypertrophy with normal cavity size and regional global systolic function lvef the estimated cardiac index is borderline low 5l min m2 tissue doppler imaging suggests a normal left ventricular filling pressure pcwp 12mmhg the right ventricular free wall is hypertrophied the right ventricular cavity is markedly dilated with severe global free wall hypokinesis there is abnormal septal motion position consistent with right ventricular pressure volume overload the aortic valve leaflets appear structurally normal with good leaflet excursion and no aortic regurgitation the mitral valve leaflets are structurally normal mild mitral regurgitation is seen the tricuspid valve leaflets are mildly thickened there is mild moderate tricuspid xxunk there is severe pulmonary artery systolic hypertension there is a small circumferential pericardial effusion without echocardiographic signs of tamponade compared with the prior study images reviewed of the overall findings are similar ct abd pelvis impression anasarca increased bilateral pleural effusions small to moderate right greater than left trace pericardial fluid increased left lower lobe collapse consolidation right basilar atelectasis has also increased new ascites no free air dilated fluid filled colon and rectum no wall thickening to suggest colitis no evidence of small bowel obstruction bilat upper extremities venous ultrasound impression non occlusive right subclavian deep vein thrombosis cta chest impression no pulmonary embolism to the subsegmental level anasarca moderate to large left and moderate right pleural effusion small pericardial effusion unchanged right ventricle and right atrium enlargement since enlarged main pulmonary artery could be due to pulmonary hypertension complete collapse of the left lower lobe pneumonia can not be ruled out right basilar atelectasis kub impression unchanged borderline distended colon no evidence of small bowel obstruction or ileus brief hospital course this is a 50f with severe pulmonary hypertension h o duodenal perf s p surgical repair cirrhosis of unknown etiology and h o polysubstance abuse who presented with septic shock secondary pneumonia with respiratory distress septic shock pneumonia fever hypotension tachycardia hypoxia leukocytosis with xxrep 3 l infiltrate initially pt was treated for both health care associated pneumonia and possible c diff infection given wbc on admission however c diff treatment was stopped after patient tested negative x3 she completed a day course of vancomycin zosyn on for pneumonia she did initially require non rebreather in the icu but for the last week prior to discharge she has had stable o2 sats to mid s on nasal cannula she did also require pressors for the first several days of this hospitalization but was successfully weaned off pressors approximately wk prior to discharge at the time of discharge she is maintaining stable bp s 100s 110s doppler due to repeated ivf boluses for hypotension during this admission the pt developed anasarca and at the time of discharge is being slowly diuresed with lasix iv daily ogilvies pseudo obstruction the pt experience abd pain and distention with radiologic evidence of dilated loops of small bowel and large bowel during this hospitalization there was never any evidence of a transition point of stricture both a rectal and ngt were placed for decompression the pts symptoms and abd exam improved during the days prior to discharge and at the time of discharge she is tolerating a regular po diet and having normal bowel movements chronic back pain the pt has a long h o chronic back pain she was treated with iv morphine here for acute on chronic back pain likely exacerbated by bed rest and ileus at discharge she was transitioned to po morphine cirrhosis the etiology of this is unknown but the pt has biopsy proven cirrhosis from liver biopsy this likely explains her baseline coagulopathy and hypoalbuminemia here she needs to have outpt f u with hepatology she has no known complications of cirrhosis at this time rue dvt the pt has a rue dvt seen on u s associated with rij cvl which was placed in house she was started on a heparin gtt which was transitioned to coumadin prior to discharge at discharge her inr is she should have follow up lab work on friday of note at discharge the pt had several healing boils on her inner upper thighs thought to be trauma from her foley rubbing against the skin no further treatment was thought to be neccessary for these medications on admission clobetasol cream apply to affected area twice a day as needed gabapentin neurontin mg tablet tablet s by mouth three times a day metoprolol tartrate mg tablet tablet s by mouth twice a day omeprazole mg capsule delayed release e c capsule delayed release e c s by mouth once a day oxycodone mg capsule capsule s by mouth q hours physical therapy dx first name9 namepattern2 location un evaluation and management of motor skills tramadol mg tablet tablet s by mouth twice a day as needed for pain trazodone mg tablet tablet s by mouth hs medications otc docusate sodium mg ml liquid teaspoon by mouth twice a day as needed magnesium oxide mg tablet tablet s by mouth twice a day discharge medications ipratropium bromide solution sig one nebulizer inhalation q6h every hours clobetasol ointment sig one appl topical hospital1 times a day lidocaine mg patch adhesive patch medicated sig one adhesive patch medicated topical qday as needed for back pain please wear on skin hrs then have hrs with patch off hemorrhoidal suppository suppository sig one suppository rectal once a day as needed for pain pantoprazole mg tablet delayed release e c sig one tablet delayed release e c po twice a day outpatient lab work please have you inr checked saturday the results should be sent to your doctor at rehab multivitamin tablet sig one tablet po once a day morphine mg ml solution sig mg po every four hours as needed for pain warfarin mg tablet sig one tablet po once daily at pm discharge disposition extended care facility hospital3 hospital discharge diagnosis healthcare associated pneumonia colonic pseudo obstruction severe pulmonary hypertension cirrhosis htn chronic back pain right upper extremity dvt discharge condition good o2 sat high s on 3l nc bp stable 100s 110s doppler patient tolerating regular diet and having bowel movements not ambulatory discharge instructions you were admitted with a pneumonia which required a stay in our icu but did not require intubation or the use of a breathing tube you did need medications for a low blood pressure for the first several days of your hospitalization while you were here you also had problems with your intestines that caused them to stop working and food to get stuck in your intestines we treated you for this and you are now able to eat and move your bowels you got a blood clot in your arm while you were here we started you on iv medication for this initially but have now transitioned you to oral anticoagulants your doctor will need to monitor the levels of this medication at rehab you will likely need to remain on this medication for months please follow up as below you need to see a hepatologist liver doctor within the next month to follow up on your diagnosis of cirrhosis your list of medications is attached please call your doctor or return to the hospital if you have fevers shortness of breath chest pain abdominal pain vomitting inability to tolerate food or liquids by mouth dizziness or any other concerning symptoms followup instructions primary care first name11 name pattern1 last name namepattern4 md phone telephone fax date time dermatology name6 md name8 md md phone telephone fax date time you need to have your blood drawn to monitor your inr or coumadin level next on saturday the doctor at the rehab with change your coumadin dose accordingly please follow up with a hepatologist liver doctor within month if you would like to make an appointment at our liver center at hospital1 the number is telephone fax please follow up with a pulmonologist about your severe pulmonary hypertension within month if you do not have a pulmonologist and would like to follow up at hospital1 the number is telephone fax completed by

401.9;38.93;493.90;785.52;995.92;070.54;276.1;038.9;486;518.82;453.8;714.0;571.5;996.74;560.1;96.07;416.0;96.09assert isinstance(dsets.tfms[0], Pipeline) # `Pipeline` of the `x_tfms`

assert isinstance(dsets.tfms[0][0], Tokenizer)

assert isinstance(dsets.tfms[0][1], Transform)

assert isinstance(dsets.tfms[0][2], Numericalize)If we just want to decode the one-hot encoded dependent variable:

_ind, _dep = x

_lbl = dsets.tfms[1].decode(_dep)

test_eq(_lbl, array(lbls)[_dep.nonzero().flatten().int().numpy()])Let’s extract the MultiCategorize transform applied by dsets on the dependent variable so that we can apply it ourselves:

tfm_cat = dsets.tfms[1][1]

test_eq(str(tfm_cat.__class__), "<class 'fastai.data.transforms.MultiCategorize'>")vocab attribute of the MultiCategorize transform stores the category vocabulary. If it was specified from outside (in this case it was) then the MultiCategorize transform will not sort the vocabulary otherwise it will.

test_eq(lbls, tfm_cat.vocab)

test_ne(lbls, sorted(tfm_cat.vocab))test_eq(str(_lbl.__class__), "<class 'fastai.data.transforms.MultiCategory'>")

test_eq(tfm_cat(_lbl), TensorMultiCategory([lbls.index(o) for o in _lbl]))

test_eq(tfm_cat.decode(tfm_cat(_lbl)), _lbl)Let’s check the reverse:

# dsets_r.decode(x_r)# dsets_r.show(x_r)Looks pretty good!

3. Making the DataLoaders object:

We need to pick the sequence length and the batch size (you might have to adjust this depending on you GPU size)

bs, sl = 16, 72We will use the dl_type argument of the DataLoaders. The purpose is to tell DataLoaders to use SortedDL class of the DataLoader, and not the usual one. SortedDL constructs batches by putting samples of roughly the same lengths into batches.

dl_type = partial(SortedDL, shuffle=True)Crucial: - We will use pad_input_chunk because our encoder AWD_LSTM will be wrapped inside SentenceEncoder. - A SenetenceEncoder expects that all the documents are padded, - with most of the padding at the beginning of the document, with each sequence beginning at a round multiple of bptt - and the rest of the padding at the end.

dls_clas = dsets.dataloaders(bs=bs, seq_len=sl,

dl_type=dl_type,

before_batch=pad_input_chunk)For the reverse:

dls_clas_r = dsets_r.dataloaders(bs=bs, seq_len=sl,

dl_type=dl_type,

before_batch=pad_input_chunk)Creating the DataLoaders object takes considerable amount of time, so do save it when working on your dataset. In this though, (as always) untar_xxx downloaded it for you:

!tree -shLD 1 {source} -P *clas*

# or using glob

# L(source.glob("**/*clas*"))/home/deb/.xcube/data/mimic3

├── [192M May 1 2022] mimic3-9k_clas.pth

├── [758K Mar 27 17:59] mimic3-9k_clas_full_vocab.pkl

├── [1.6G Apr 21 18:29] mimic3-9k_dls_clas.pkl

├── [1.6G Apr 5 17:17] mimic3-9k_dls_clas_old_remove_later.pkl

├── [1.6G Apr 21 18:30] mimic3-9k_dls_clas_r.pkl

└── [1.6G Apr 5 17:18] mimic3-9k_dls_clas_r_old_remove_later.pkl

0 directories, 6 filesAside: Some handy linux find tricks: 1. https://stackoverflow.com/questions/18312935/find-file-in-linux-then-report-the-size-of-file-searched 2. https://stackoverflow.com/questions/4210042/how-to-exclude-a-directory-in-find-command

# !find -path ./models -prune -o -type f -name "*caml*" -exec du -sh {} \;

# !find -not -path "./data/*" -type f -name "*caml*" -exec du -sh {} \;

# !find {path_data} -type f -name "*caml*" | xargs du -shIf you want to load the dls for the full dataset:

dls_clas = torch.load(source/'mimic3-9k_dls_clas.pkl')

dls_clas_r = torch.load(source/'mimic3-9k_dls_clas_r.pkl')CPU times: user 13.1 s, sys: 3.16 s, total: 16.2 s

Wall time: 16.6 sLet’s take a look at the data:

# dls_clas.show_batch(max_n=3)# dls_clas_r.show_batch(max_n=3)4. (Optional) Making the DataLoaders using fastai’s DataBlock API:

It’s worth mentioning here that all the steps we performed to create the DataLoaders can be packaged together using fastai’s DataBlock API.

dblock = DataBlock(

blocks = (TextBlock.from_df('text', seq_len=sl, vocab=lm_vocab), MultiCategoryBlock(vocab=lbls)),

get_x = ColReader('text'),

get_y = ColReader('labels', label_delim=';'),

splitter = splitter,

dl_type = dl_type,

)dls_clas = dblock.dataloaders(df, bs=bs, before_batch=pad_input_chunk)dls_clas.show_batch(max_n=5)Learner for Multi-Label Classifier Fine-Tuning

# set_seed(897997989, reproducible=True)

# set_seed(67, reproducible=True)

set_seed(1, reproducible=True)This is where we have dls_clas(for the full dataset) we made in the previous section:

!tree -shDL 1 {source} -P "*clas*"

# or using glob

# L(source.glob("**/*clas*"))/home/deb/.xcube/data/mimic3

├── [576M Jun 8 13:21] mimic3-9k_clas_full.pth

├── [576M May 26 12:00] mimic3-9k_clas_full_r.pth

├── [758K Mar 27 17:59] mimic3-9k_clas_full_vocab.pkl

├── [1.6G Apr 21 18:29] mimic3-9k_dls_clas.pkl

└── [1.6G Apr 21 18:30] mimic3-9k_dls_clas_r.pkl

0 directories, 5 filesAnd this is where we have the finetuned language model:

!tree -shDL 1 {source} -P '*fine*'/home/deb/.xcube/data/mimic3

├── [165M Apr 30 2022] mimic3-9k_lm_finetuned.pth

└── [165M May 7 2022] mimic3-9k_lm_finetuned_r.pth

0 directories, 2 filesAnd this is where we have the bootstrapped brain and the label biases:

!tree -shDL 1 {source_l2r} -P "*tok_lbl_info*|*p_L*"/home/deb/.xcube/data/mimic3_l2r

├── [3.8G Jun 24 2022] mimic3-9k_tok_lbl_info.pkl

└── [ 70K Apr 3 18:35] p_L.pkl

0 directories, 2 filesNext we’ll create a tmp directory to store results. In order for our learner to have access to the finetuned language model we need to symlink to it.

tmp = Path.cwd()/'tmp/models'

tmp.mkdir(exist_ok=True, parents=True)

tmp = tmp.parent

# (tmp/'models'/'mimic3-9k_lm_decoder.pth').symlink_to(source/'mimic3-9k_lm_decoder.pth') # run this just once

# (tmp/'models'/'mimic3-9k_lm_decoder_r.pth').symlink_to(source/'mimic3-9k_lm_decoder_r.pth') # run this just once

# (tmp/'models'/'mimic3-9k_lm_finetuned.pth').symlink_to(source/'mimic3-9k_lm_finetuned.pth') # run this just once

# (tmp/'models'/'mimic3-9k_lm_finetuned_r.pth').symlink_to(source/'mimic3-9k_lm_finetuned_r.pth') # run this just once

# (tmp/'models'/'mimic3-9k_tok_lbl_info.pkl').symlink_to(join_path_file('mimic3-9k_tok_lbl_info', source_l2r, ext='.pkl')) #run this just once

# (tmp/'models'/'p_L.pkl').symlink_to(join_path_file('p_L', source_l2r, ext='.pkl')) #run this just once

# (tmp/'models'/'lin_lambdarank_full.pth').symlink_to(join_path_file('lin_lambdarank_full', source_l2r, ext='.pth')) #run this just once

# list_files(tmp)

!tree -shD {tmp}/home/deb/xcube/nbs/tmp

├── [ 23G Feb 21 13:27] dls_full.pkl

├── [1.7M Feb 10 16:40] dls_tiny.pkl

├── [152M Mar 8 00:28] lin_lambdarank_full.pth

├── [1.0M Mar 7 16:52] lin_lambdarank_tiny.pth

├── [ 10M Apr 21 17:48] mimic3-9k_dls_clas_tiny.pkl

├── [ 10M Apr 21 17:48] mimic3-9k_dls_clas_tiny_r.pkl

├── [4.0K Jun 7 17:39] models

│ ├── [ 56 Apr 17 17:29] lin_lambdarank_full.pth -> /home/deb/.xcube/data/mimic3_l2r/lin_lambdarank_full.pth

│ ├── [ 11K Apr 17 13:17] log.csv

│ ├── [576M Jun 8 02:04] mimic3-9k_clas_full.pth

│ ├── [5.4G May 10 18:21] mimic3-9k_clas_full_predslog.pkl

│ ├── [576M May 26 11:55] mimic3-9k_clas_full_r.pth

│ ├── [758K May 26 11:55] mimic3-9k_clas_full_r_vocab.pkl

│ ├── [264K May 10 18:35] mimic3-9k_clas_full_rank.csv

│ ├── [758K Jun 8 02:04] mimic3-9k_clas_full_vocab.pkl

│ ├── [196M Jun 3 13:21] mimic3-9k_clas_tiny.pth

│ ├── [273M Apr 3 13:19] mimic3-9k_clas_tiny_r.pth

│ ├── [635K Apr 3 13:19] mimic3-9k_clas_tiny_r_vocab.pkl

│ ├── [635K Jun 3 13:21] mimic3-9k_clas_tiny_vocab.pkl

│ ├── [ 53 Jun 7 17:39] mimic3-9k_lm_decoder.pth -> /home/deb/.xcube/data/mimic3/mimic3-9k_lm_decoder.pth

│ ├── [ 55 Jun 7 17:39] mimic3-9k_lm_decoder_r.pth -> /home/deb/.xcube/data/mimic3/mimic3-9k_lm_decoder_r.pth

│ ├── [ 55 Mar 8 16:09] mimic3-9k_lm_finetuned.pth -> /home/deb/.xcube/data/mimic3/mimic3-9k_lm_finetuned.pth

│ ├── [ 57 Mar 19 19:27] mimic3-9k_lm_finetuned_r.pth -> /home/deb/.xcube/data/mimic3/mimic3-9k_lm_finetuned_r.pth

│ ├── [ 59 Mar 29 17:36] mimic3-9k_tok_lbl_info.pkl -> /home/deb/.xcube/data/mimic3_l2r/mimic3-9k_tok_lbl_info.pkl

│ ├── [758K Apr 15 14:29] mimic5_tmp_vocab.pkl

│ └── [ 40 Apr 3 20:03] p_L.pkl -> /home/deb/.xcube/data/mimic3_l2r/p_L.pkl

└── [1.2M Mar 7 16:46] nn_lambdarank_tiny.pth

1 directory, 26 filesLet’s now get the dataloaders for the classifier. We’ll also save classifier with fname.

# fname = 'mimic3-9k_clas_tiny'

fname = 'mimic3-9k_clas_full'if 'tiny' in fname:

dls_clas = torch.load(tmp/'mimic3-9k_dls_clas_tiny.pkl', map_location=default_device())

dls_clas_r = torch.load(tmp/'mimic3-9k_dls_clas_tiny_r.pkl')

# dls_clas.show_batch(max_n=5)

elif 'full' in fname:

dls_clas = torch.load(source/'mimic3-9k_dls_clas.pkl')

dls_clas_r = torch.load(source/'mimic3-9k_dls_clas_r.pkl')

# dls_clas.show_batch(max_n=5)Let’s create the saving callback upfront:

fname_r = fname+'_r'

cbs=SaveModelCallback(monitor='valid_precision_at_k', fname=fname, with_opt=True, reset_on_fit=True)

cbs_r=SaveModelCallback(monitor='valid_precision_at_k', fname=fname_r, with_opt=True, reset_on_fit=True)We will make the TextLearner (Here you can use pretrained=False to save time beacuse we are anyway going to load_encoder later which will replace the encoder wgts with the ones we that we have in the fine-tuned LM):

Todo(Deb): Implement TTA - make max_len=None during validation - magnify important tokens

learn = xmltext_classifier_learner(dls_clas, AWD_LSTM, drop_mult=0.1, max_len=None,#72*40,

metrics=partial(precision_at_k, k=15), path=tmp, cbs=cbs,

pretrained=False,

splitter=None,

running_decoder=True).to_fp16()

learn_r = xmltext_classifier_learner(dls_clas_r, AWD_LSTM, drop_mult=0.1, max_len=None,#72*40,

metrics=partial(precision_at_k, k=15), path=tmp, cbs=cbs_r,

pretrained=False,

splitter=None,

running_decoder=True).to_fp16()# for i,g in enumerate(awd_lstm_xclas_split(learn.model)):

# print(f"group: {i}, {g=}")

# print("****")Note: Don’t forget to check k in inattention

A few customizations into fastai’s callbacks:

To tracks metrics on training bactches during an epoch:

# tell `Recorder` to track `train_metrics`

assert learn.cbs[1].__class__ is Recorder

setattr(learn.cbs[1], 'train_metrics', True)

assert learn_r.cbs[1].__class__ is Recorder

setattr(learn_r.cbs[1], 'train_metrics', True)import copy

mets = copy.deepcopy(learn.recorder._valid_mets)

# mets = L(AvgSmoothLoss(), AvgMetric(precision_at_k))

rv = RunvalCallback(mets)

learn.add_cbs(rv)

learn.cbs(#9) [TrainEvalCallback,Recorder,CastToTensor,ProgressCallback,SaveModelCallback,ModelResetter,RNNCallback,MixedPrecision,RunvalCallback]@patch

def after_batch(self: ProgressCallback):

self.pbar.update(self.iter+1)

mets = ('_valid_mets', '_train_mets')[self.training]

self.pbar.comment = ' '.join([f'{met.name} = {met.value.item():.4f}' for met in getattr(self.recorder, mets)])The following line essentially captures the magic of ULMFit’s transfer learning:

learn = learn.load_encoder('mimic3-9k_lm_finetuned')

learn_r = learn_r.load_encoder('mimic3-9k_lm_finetuned_r')# learn = learn.load_brain('mimic3-9k_tok_lbl_info', 'p_L')

# learn_r = learn_r.load_brain('mimic3-9k_tok_lbl_info')sv_idx = learn.cbs.attrgot('__class__').index(SaveModelCallback)

learn.cbs[sv_idx]

with learn.removed_cbs(learn.cbs[sv_idx]):

learn.fit(1, lr=1e-2)| epoch | train_loss | train_precision_at_k | valid_loss | valid_precision_at_k | time |

|---|---|---|---|---|---|

| 0 | 0.012547 | 0.403337 | 0.013126 | 0.470087 | 41:55 |

# os.getpid()

# learn.fit(3, lr=3e-2)| epoch | train_loss | train_precision_at_k | valid_loss | valid_precision_at_k | time |

|---|---|---|---|---|---|

| 0 | 0.010768 | 0.434745 | 0.011315 | 0.491044 | 20:15 |

| 1 | 0.010263 | 0.465117 | 0.011162 | 0.494939 | 20:01 |

Better model found at epoch 0 with valid_precision_at_k value: 0.4910438908659549.

Better model found at epoch 1 with valid_precision_at_k value: 0.4949387109529458.learn = learn.load((learn.path/learn.model_dir)/fname)

validate(learn)# os.getpid()

# sorted(learn.cbs.zipwith(learn.cbs.attrgot('order')), key=lambda tup: tup[1] )class _FakeLearner:

def to_detach(self,b,cpu=True,gather=True):

return to_detach(b,cpu,gather)

_fake_l = _FakeLearner()

def cpupy(t): return t.cpu().numpy() if isinstance(t, Tensor) else t

learn.model = learn.model.to('cuda:0')TODO: - Also print avg text lengths

# import copy

# mets = L(AvgLoss(), AvgMetric(partial(precision_at_k, k=15)))

mets = L(F1ScoreMulti(thresh=0.14, average='macro'), F1ScoreMulti(thresh=0.14, average='micro')) #(learn.recorder._valid_mets)

learn.model.eval()

mets.map(Self.reset())

pbar = progress_bar(learn.dls.valid)

log_file = join_path_file('log', learn.path/learn.model_dir, ext='.csv')

with open(log_file, 'w', newline='') as csvfile:

writer = csv.writer(csvfile)

header = mets.attrgot('name') + L('bs', 'n_lbs', 'mean_nlbs')

writer.writerow(header)

for i, (xb, yb) in enumerate(pbar):

_fake_l.yb = (yb,)

_fake_l.y = yb

_fake_l.pred, *_ = learn.model(xb)

_fake_l.loss = Tensor(learn.loss_func(_fake_l.pred, yb))

for met in mets: met.accumulate(_fake_l)

pbar.comment = ' '.join(mets.attrgot('value').map(str))

yb_nlbs = Tensor(yb.count_nonzero(dim=1)).float().cpu().numpy()

writer.writerow(mets.attrgot('value').map(cpupy) + L(find_bs(yb), yb_nlbs, yb_nlbs.mean()))pd.set_option('display.max_rows', 100)

df = pd.read_csv(log_file)

df# learn.model[1].label_bias.data.min(), learn.model[1].label_bias.data.max()

# nn.init.kaiming_normal_(learn.model[1].label_bias.data.unsqueeze(-1))

# # init_default??

# with torch.no_grad():

# learn.model[1].label_bias.data = learn.lbsbias

# learn.model[1].label_bias.data.min(), learn.model[1].label_bias.data.max()

# learn.model[1].pay_attn.attn.func.f#, learn_r.model[1].pay_attn.attn.func.f

# set_seed(1, reproducible=True)Dev (Ignore)

df_des = pd.read_csv(source/'code_descriptions.csv')learn = learn.load_brain('mimic3-9k_tok_lbl_info')

learn.brain.shapePerforming brainsplant...

Successfull!torch.Size([57376, 1271])L(learn.dls.vocab[0]), L(learn.dls.vocab[1])((#57376) ['xxunk','xxpad','xxbos','xxeos','xxfld','xxrep','xxwrep','xxup','xxmaj','the'...],

(#1271) ['431','507.0','518.81','112.0','287.4','401.9','427.89','600.00','272.4','300.4'...])rnd_code = '733.00' #random.choice(learn.dls.vocab[1]) # '96.04'

des = load_pickle(join_path_file('code_desc', source, ext='.pkl'))

k=20

idx, *_ = dls_clas.vocab[1].map_objs([rnd_code])

print(f"For the icd code {rnd_code} which means {des[rnd_code]}, the top {k} tokens in the brain are:")

print('\n'.join(L(array(learn.dls.vocab[0])[learn.brain[:, idx].topk(k=k).indices.cpu()], use_list=True)))For the icd code 733.00 which means Osteoporosis, unspecified, the top 20 tokens in the brain are:

osteoporosis

fosamax

alendronate

d3

carbonate

cholecalciferol

70

osteoperosis

actonel

she

her

he

his

vitamin

risedronate

qweek

compression

raloxifene

ms

maleLet’s pull out a text from the batch and take a look at the text and its codes:

xb, yb = dls_clas.one_batch()

i = 3

text = ' '.join([dls_clas.vocab[0][o] for o in xb[3] if o !=1])

codes = dls_clas.tfms[1].decode(yb[3])

print(f"The text is {len(text)} words long and has {len(codes)} codes")The text is 25411 words long and has 36 codesdf_codes = pd.DataFrame(columns=['code', 'description', 'freq', 'top_toks'])

df_codes['code'] = codes

df_codes['description'] = mapt(des.get, codes)

df_codes['freq'] = mapt(lbl_freqs.get, codes)

idxs = learn.dls.vocab[1].map_objs(codes)

top_toks = array(learn.dls.vocab[0])[learn.brain[:, idxs].topk(k=k, dim=0).indices.cpu()].T

top_vals = learn.brain[:, idxs].topk(k=k, dim=0).values.cpu().T

df_codes['top_toks'] = L(top_toks, use_list=True).map(list)

df_codes| code | description | freq | top_toks | |

|---|---|---|---|---|

| 0 | 507.0 | Pneumonitis due to inhalation of food or vomitus | 15 | [aspiration, pneumonia, pneumonitis, pna, flagyl, swallow, peg, sputum, intubation, secretions, levofloxacin, aspirated, suctioning, video, hypoxic, opacities, tube, zosyn, hypoxia, vancomycin] |

| 1 | 518.81 | Acute respiratory failure | 42 | [intubation, intubated, peep, failure, respiratory, fio2, hypoxic, extubation, sputum, pneumonia, sedated, abg, endotracheal, hypercarbic, expired, vent, hypoxia, pco2, aspiration, vancomycin] |

| 2 | 96.04 | Insertion of endotracheal tube | 48 | [intubated, intubation, extubation, surfactant, extubated, endotracheal, respiratory, peep, sedated, airway, ventilator, ventilation, protection, fio2, reintubated, tube, expired, vent, feeds, et] |

| 3 | 96.72 | Continuous mechanical ventilation for 96 consecutive hours or more | 35 | [intubated, tracheostomy, ventilator, trach, vent, intubation, tube, sputum, peg, peep, extubation, ventilation, wean, feeds, secretions, bal, sedated, weaning, fio2, reintubated] |

| 4 | 99.15 | Parenteral infusion of concentrated nutritional substances | 22 | [tpn, nutrition, parenteral, prematurity, phototherapy, feeds, hyperbilirubinemia, immunizations, preterm, circumference, enteral, newborn, pediatrician, infant, caffeine, prenatal, infants, rubella, surfactant, percentile] |

| 5 | 38.93 | Venous catheterization, not elsewhere classified | 77 | [picc, line, vancomycin, central, zosyn, cultures, grew, placement, flagyl, vanco, tpn, septic, recon, placed, flush, levophed, bacteremia, intubated, ij, soln] |

| 6 | 785.52 | Septic shock | 17 | [septic, shock, levophed, pressors, sepsis, hypotension, zosyn, vasopressin, hypotensive, myelos, meropenem, broad, metas, atyps, expired, pressor, spectrum, urosepsis, vancomycin, vanc] |

| 7 | 995.92 | Severe sepsis | 23 | [septic, shock, sepsis, levophed, pressors, hypotension, zosyn, meropenem, expired, vancomycin, myelos, metas, atyps, vanco, bacteremia, vanc, broad, hypotensive, cefepime, spectrum] |

| 8 | 518.0 | Pulmonary collapse | 9 | [collapse, atelectasis, bronchoscopy, bronchus, plugging, plug, lobe, collapsed, bronch, pleural, secretions, spirometry, thoracentesis, incentive, newborn, rubella, infant, immunizations, hemithorax, pediatrician] |

| 9 | 584.9 | Acute renal failure, unspecified | 49 | [renal, arf, failure, cr, prerenal, baseline, creatinine, kidney, medicine, acute, likely, setting, elevated, held, urine, cxr, ed, infant, chf, improved] |

| 10 | 799.02 | Hypoxemia | 8 | [hypoxia, hypoxemia, hypoxic, nrb, cxr, medquist36, invasive, 4l, major, attending, job, brief, medicine, chief, dictated, complaint, 6l, legionella, probnp, procedure] |

| 11 | 427.31 | Atrial fibrillation | 56 | [fibrillation, atrial, afib, amiodarone, coumadin, rvr, fib, warfarin, digoxin, irregular, irregularly, diltiazem, converted, cardioversion, af, anticoagulation, paroxysmal, metoprolol, paf, irreg] |

| 12 | 599.0 | Urinary tract infection, site not specified | 33 | [uti, tract, urinary, nitrofurantoin, coli, ciprofloxacin, urosepsis, urine, sensitivities, ua, tobramycin, organisms, sulbactam, sensitive, meropenem, infection, mic, cipro, ceftazidime, piperacillin] |

| 13 | 285.9 | Anemia, unspecified | 32 | [anemia, newborn, infant, immunizations, rubella, pediatrician, prenatal, circumference, allergies, phototherapy, prematurity, gestation, normocytic, seat, medical, infants, medquist36, invasive, nonreactive, apgars] |

| 14 | 486 | Pneumonia, organism unspecified | 25 | [pneumonia, acquired, pna, levofloxacin, azithromycin, legionella, community, sputum, lobe, infiltrate, opacity, consolidation, productive, rll, opacities, cxr, multifocal, hcap, ceftriaxone, vancomycin] |

| 15 | 038.42 | Septicemia due to escherichia coli [E. coli] | 6 | [coli, escherichia, urosepsis, sulbactam, ecoli, tazo, gnr, tobramycin, septicemia, pyelonephritis, cefazolin, piperacillin, cefuroxime, ceftazidime, interpretative, meropenem, bacteremia, sensitivities, mic, 57] |

| 16 | 733.00 | Osteoporosis, unspecified | 20 | [osteoporosis, fosamax, alendronate, d3, carbonate, cholecalciferol, 70, osteoperosis, actonel, she, her, he, his, vitamin, risedronate, qweek, compression, raloxifene, ms, male] |

| 17 | 591 | Hydronephrosis | 3 | [nephrostomy, ureter, ureteral, hydroureter, hydronephrosis, urology, hydroureteronephrosis, obstructing, pyelonephritis, uropathy, collecting, nephrostogram, urosepsis, upj, ureteropelvic, calculus, perinephric, uvj, stone, hydro] |

| 18 | 276.1 | Hyposmolality and/or hyponatremia | 17 | [hyponatremia, hyponatremic, osmolal, hypovolemic, siadh, restriction, paracentesis, hypervolemic, ascites, cirrhosis, rifaximin, spironolactone, meld, ns, portal, icteric, likely, medicine, encephalopathy, hypovolemia] |

| 19 | 560.1 | Paralytic ileus | 5 | [ileus, kub, loops, flatus, tpn, ngt, distension, illeus, obstruction, sbo, exploratory, laparotomy, clears, decompression, distention, sips, adhesions, npo, ng, passing] |

| 20 | E849.7 | Accidents occurring in residential institution | 9 | [major, medquist36, attending, brief, invasive, job, surgical, procedure, chief, complaint, diagnosis, instructions, followup, pertinent, allergies, 8cm2, daily, dictated, disposition, results] |

| 21 | E870.8 | Accidental cut, puncture, perforation or hemorrhage during other specified medical care | 3 | [recannulate, suboptimald, whistle, trickle, insom, tumeric, erythematosis, advertisement, choledochotomy, disaese, xfusions, ameniable, patrial, intrasinus, reproduceable, adamsts, valgan, interscalene, duodenorrhaphy, strattice] |

| 22 | 788.30 | Urinary incontinence, unspecified | 2 | [incontinence, incontinent, detrol, oxybutynin, urinary, aledronate, incontinance, tolterodine, t34, vesicare, condom, tenders, urinal, thyrodectomy, arthritides, urge, solifenacin, hospitalize, oxybutinin, sparc] |

| 23 | 562.10 | Diverticulosis of colon (without mention of hemorrhage) | 4 | [diverticulosis, sigmoid, cecum, diverticulitis, colonoscopy, diverticula, diverticular, hemorrhoids, diverticuli, polypectomy, colon, colonic, polyp, prep, colonscopy, tagged, lgib, maroon, brbpr, polyps] |

| 24 | E879.6 | Urinary catheterization as the cause of abnormal reaction of patient, or of later complication, without mention of misadventure at time of procedure | 2 | [indwelling, urethra, insufficicency, hyposensitive, nonambulating, urology, methenamine, suprapubic, fosfomycin, neurogenic, utis, mirabilis, sporogenes, mdr, pyuria, urologist, urosepsis, policeman, uti, vse] |

| 25 | 401.1 | Benign essential hypertension | 4 | [diarhea, medquist36, job, feline, ronchi, osteosclerotic, invasive, major, attending, septicemia, brief, dictated, benzodiazepene, unprovoked, caox3, rhinorrhea, metbolic, lak, lext, procedure] |

| 26 | 250.22 | Diabetes mellitustype II [non-insulin dependent type] [NIDDM type] [adult-onset type] or unspecified type with hyperosmolarity, uncontrolled | 2 | [hyperosmolar, nonketotic, hhs, hhnk, ketotic, hyperosmotic, honk, honc, hyperglycemic, flatbush, sniffs, pelvocaliectasis, pseudohyponatremia, ha1c, furosemdie, 25gx1, nistatin, hypersomolar, 05u, guaaic] |

| 27 | 345.80 | Other forms of epilepsy, without mention of intractable epilepsy | 1 | [forefinger, indentified, emu, overshunting, midac, gliosarcoma, foscarnate, signifcantly, mirroring, jamacia, 4tab, t34, fibrinolytic, 3tab, majestic, persecutory, uncomfirmed, polyspike, seperated, hoffmans] |